On January 24, 2024, we brought together policymakers, activists, human rights defenders, and academics from all over Europe for Privacy Camp 2024. We came together to explore the theme ‘Revealing, Rethinking, and Changing Systems’.

Whether it’s political systems or AI systems, they underpin everything in our world and in the field of digital rights. We can blame them, try to understand them, or maybe even reshape them.

In 2024, “Change” is the name of the game. With the European Parliament elections later this year and a new mandate for the European Commission, we spent the day thinking about new beginnings, how the old and new systems interact, and how we can leverage these moments for transformative change beyond 2024.

We welcomed more than 240 people in-person in Brussels and were joined by over 400 people online.

The 12th edition of Privacy Camp was organised by European Digital Rights (EDRi), in collaboration with our partners the Research Group on Law, Science, Technology & Society (LSTS) at Vrije Universiteit Brussel (VUB), Privacy Salon vzw, the Institute for European Studies (IEE) at Université Saint-Louis – Bruxelles, the Institute of Information Law (IViR) at University of Amsterdam and the Racism and Technology Center.

Read summaries of all the sessions at #PrivacyCamp24 below to get inspired about how we’re rethinking and reshaping privacy and digital rights systems going forward:

Contents

- Digital systems of exclusion: accessibility and equity in public services

- Health Data: From EU’s EHDS to England’s NHS National Data opt-out

- Down with Datacenters: developing critical policy for environmentally sustainable tech in Europe

- Extractivism and Resistances at Europe’s frontlines

- Privacy is Big business: How Big Tech instrumentalizes PETs to expand its infrastructural power

- The growing infrastructure and business model behind (im)migration and surveillance technologies

- EDPS Summit

- Using reverse engineering and GDPR to support workers in the gig economy

- Alternative ‘intimate’ realities: Inclusive ways of framing intimate image based abuse (IIBA)

- Organising towards digital justice in Europe

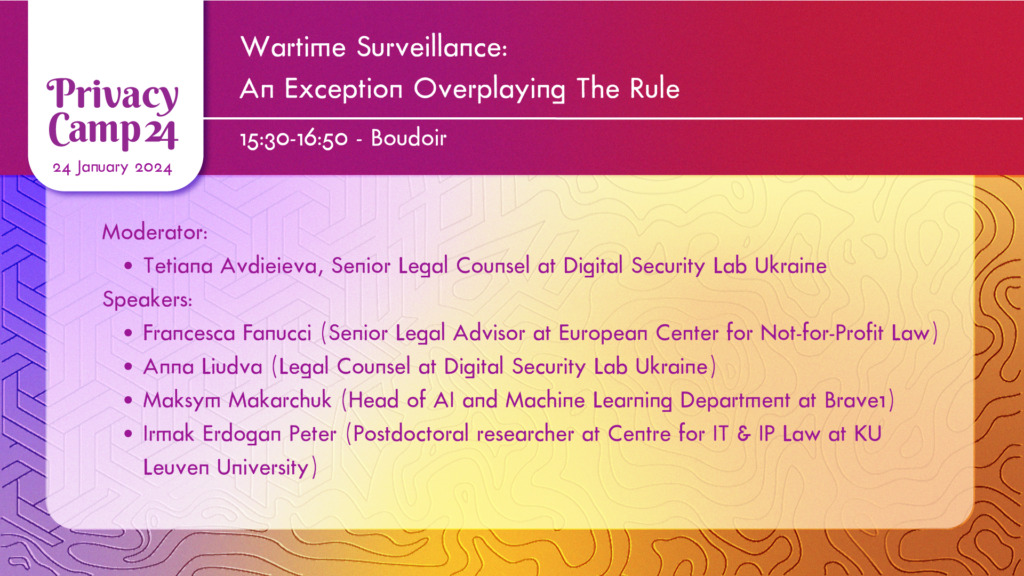

- Wartime surveillance: an exception overplaying the rule

- The missing piece: using collective action for digital rights protection

We loved bringing together so many people for a reflection on all things digital rights, and organising the event to be accessible even from the comfort of people’s homes. But putting together an event like Privacy Camp is no easy feat. If you enjoyed #PrivacyCamp24, help us organise more such events by supporting us with a donation.

BECOME A REGULAR SUPPORTER!

Digital systems of exclusion: accessibility and equity in public services

Session description | Session recording

One of the first sessions of the day addressed the increasing digitalisation of all aspects of daily life – including access to public services like health, education, social benefits, tax returns and political participation. This digitalisation of public services carries with it risks of surveillance, profiling, exclusion and privacy violations, especially for those most marginalised amongst us.

The moderator, Chloé Berthélémy, Senior Policy Advisor, EDRi, started the session by pointing out that while technology is often framed as an efficient and practical solution for increasing access to public services, it often creates new barriers and exacerbates exclusion for those who are already excluded. The integration of algorithms in public services also becomes a method of social control, such as through surveillance technologies that target racialised communities.

Sergio Barranco Perez, Policy Officer at European Federation of National Organisations Working with the Homeless (FEANTSA), outlined several ways in which the digitalisation of public services affects homeless people. In some cases, more digitalisation can be advantageous – homeless people can more easily contact their social workers or look for accommodation. However, they have to overcome many challenges to do so. When public services are digitised without offline alternatives for marginalised people, it goes against the very principle of the establishment of such services, which is to guarantee equality of access to everyone.

Nawal Mustafa, a researcher from Vrije Universiteit Amsterdam, made a poignant observation that technology always advances faster than law, which has to catch up to mitigate the harms caused by emerging tech. Nawal explained the Dutch SyRI case as an example where a digital welfare fraud detection system (SyRI) ended up automating tracking and profiling of racialised and poor people.

Alex, Campaign officer at La Quadrature du Net, talked about the use of ‘risk profiling’ algorithms in French public administration. He revealed that an examination of the algorithm used by the family branch of the French welfare system (Caisse d’allocations familiales, CNAF) showed that the algorithm itself was discriminatory. The number of poor and racialised families unfairly targeted increased with the introduction of this system because the administration was able to ‘hide behind’ algorithms and took no responsibility for harms caused.

Rural and indigenous communities are another group impacted by increasingly digital-only public services. Karla Prudencio Ruiz, member of the Board of Directors at Rhizomatica and Advocacy Officer at Privacy International, made a critical point about the concept of bridging the digital divide: “Digital divide is a colonial concept. It separates those who are ‘connected’, seen as ‘advanced’ from those who are not ‘connected’. The second group are all lumped into one category and are seen as needing to be saved through digitisation”.

Health Data: From EU’s EHDS to England’s NHS National Data opt-out

Session description | Session recording

This session discussed the proposed European Health Data Space (EHDS) in light of the lessons learned from England’s implementation of the National Opt-Out system. Teodora Lalova-Spinks, Senior Researcher at KU Leuven’s Centre for IT & IP Law Network, provided an overview of the EHDS and its main rules, and questioned how the EHDS proposal would reconcile the rights and interests of individuals with those of society.

Nicola Hamilton, Head of Understanding Patient Data, covered a brief history of the national data opt-out in England and more recent information on the demographics of those who have been opting out. She also talked about recent developments, including the Federated Data Platform and Secure Data Environments, and how these have been received, with learnings that could be relevant to the development of the EHDS.

Benoît Marchal, Founder and Director of PicAps Association, highlighted the differences between ‘simple’ health data research and consent for clinical trials, particularly for people with rare diseases, and how opt-in models in the latter often work because of smaller, generally more supportive communities.

Francesco Vogelezang, Policy Advisor at the European Parliament, clarified the differences between opt-in and opt-out. He discussed the latest developments of the European Parliament’s position and mentioned challenges in reaching an agreement on data regulation across the EU.

Questions received concerned whether an opt-out system is truly giving people ownership and choice in an ethical way, and whether measures such as increased transparency through products like data usage reports could help improve trust.

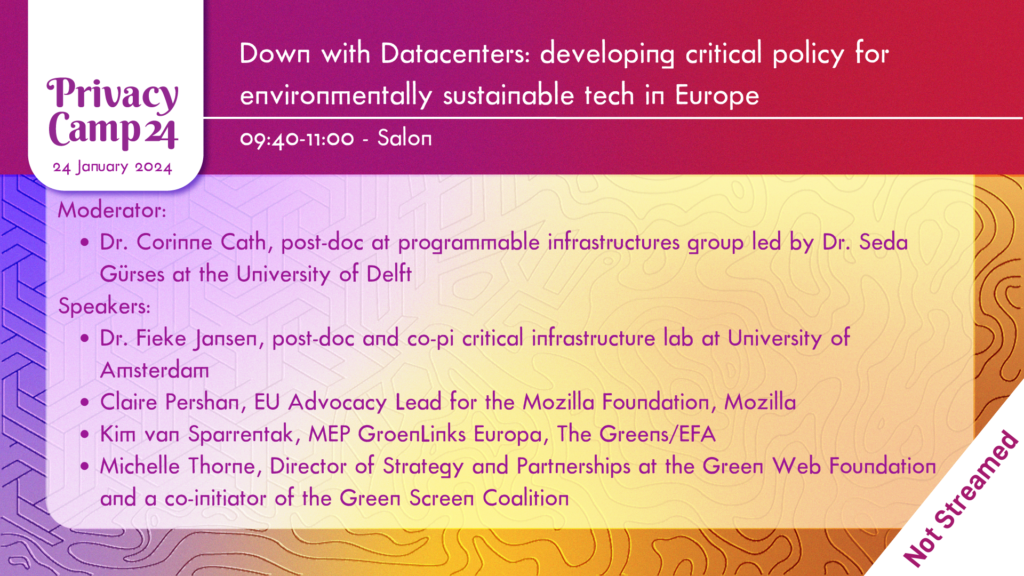

Down with Datacenters: developing critical policy for environmentally sustainable tech in Europe

Session description | Session not recorded

The panel started with an introduction by Dr. Corinne Cath, post-doc at the programmable infrastructures group led by Dr. Seda Gürses at the University of Delft. She pointed out that the discussion of data centers needs to center a radical analysis of their impact, and go beyond improving their efficiency. Dr. Cath then flagged that most corporations behind data centers are not only social media platforms but go beyond this function (Amazon, for example), and that regulation lacks ambition and is often too business friendly.

After the introduction, Claire Pershan, EU Advocacy Lead for the Mozilla Foundation, highlighted the challenges posed by generative AI and cloud-based models, particularly their environmental impact. The main issue is the lack of transparency regarding carbon emissions, exacerbated by companies hiding such data. She emphasised the need to separate discussions on scale and efficiency, challenging the prevailing “bigger is better” mentality. The winner-takes-all dynamic in the tech industry leads to inefficiencies and neglects citizens and the climate.

Michelle Thorne, Director of Strategy and Partnerships at the Green Web Foundation, discussed the environmental impact of the internet, which surpasses that of the aviation and shipping industries. Thorne advocated for a transition away from fossil fuels and for ensuring a just social foundation for a more sustainable online ecosystem.

Dr. Fieke Jansen, post-doc and co-pilot at the Critical Infrastructure Lab at the University of Amsterdam, pointed to the intersectionality of issues around digital infrastructure: copper and silicon mining, but also topics that do not receive enough attention, such as land and water, and surveillance. Dr. Jansen emphasised the governance challenges, citing conflicts arising from companies relocating data centers and the need for collective decision-making.

After the initial remarks, the audience split into four workshop groups on 1) the political economy of data centers, 2) existing regulation, 3) environmental, human, and social costs and 4) direct action steps.

Extractivism and Resistances at Europe’s frontlines

Session description | Session recording

This panel challenged the twin transition’s extractivist foundation. It focused particularly on the raw materials discussions in the EU (Diego Marin, Policy Advisor at European Environmental Bureau) and anti-mining community mobilisations in Serbia (Nina Djukanović, Doctoral Researcher, University of Oxford) and Portugal (Mariana Riquito Ribeiro, Doctoral Researcher, University of Amsterdam).

Maximilian Jung, Coordinator from Bits & Bäume, started by setting the context of the discussion, pointing to the EU’s attempt to “marry” green extractivism and sustainability without compromising on economic growth. He pointed to the immense speed with which the Critical Raw Materials Act has been pushed ahead, and opened the discussion around why green extractivism is a myth and how the twin transition is failing people and the planet.

Nina described the case of Rio Tinto in Serbia, a lithium mining project opposed by a huge community of activists. Protests started in 2021, and in 2022 the project was canceled. However, due to Serbia’s EU accession path, the country’s president Vucic is ready to re-open the discussions. Mariana presented the case of Northern Portugal, where civil society has been protesting the building of open mines in the name of the twin transition since 2018. Last year, land grabbing and aggression on the sites increased, with many suffering from physical violence.

Diego pointed to the importance of focusing on the materiality of technologies and the geopolitical context. He said that with critical materials, the mining is an issue, but the biggest issue is the waste produced. Taking as an example the case of Cajamarca, Peru, Diego pointed out that critical materials mining waste produces acid mine drainage (low pH levels in waste water due to copper and other minerals, which turn the water red), impacting drinking water, with the affected regions showing the highest rates of cancer.

Finally, Madhuri Karak, research consultant on climate justice, provided an overview of the research she was commissioned to do on behalf of EDRi. Highlights included the need to expand the conversation beyond twin transition and digitalisation towards a larger agenda: defense industry, space exploration, etc. Moreover, she pointed to the important work of the coalition of 50+ NGOs advocating around the EU raw materials act.

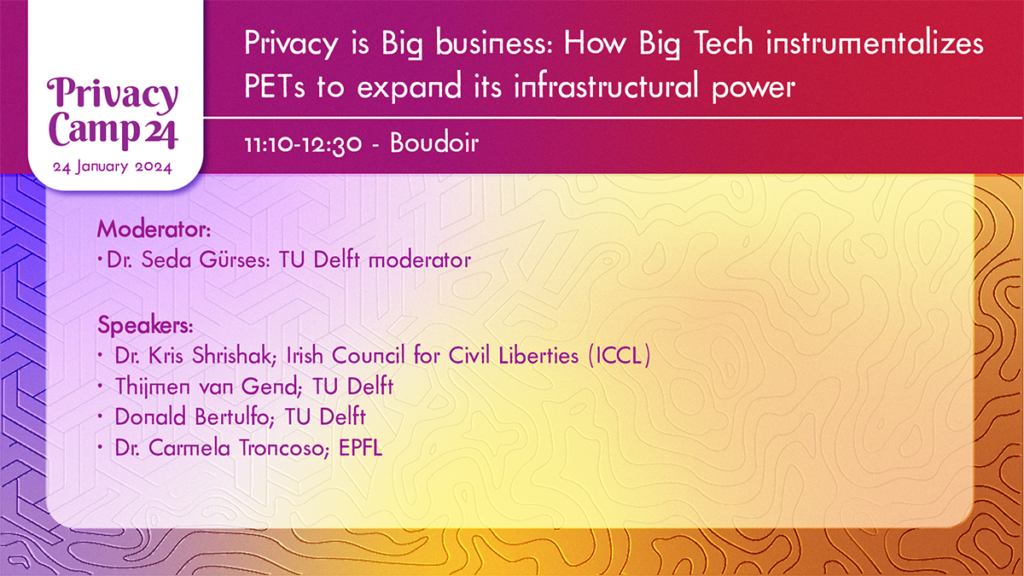

Privacy is Big business: How Big Tech instrumentalizes PETs to expand its infrastructural power

Session description | Session recording

The panel aimed to demonstrate how Privacy Enhancing Technologies (PETs) are being instrumentalised by large tech companies to grow their market and infrastructural power.

Seda Gürses, Associate Professor at TU Delft, set the stage for the panel by juxtaposing what PETs ought to be doing and where it is being taken by the business of computing. According to her, PETs aim beyond protecting individuals by guaranteeing data confidentiality. They also aim to limit power asymmetries due to the accumulation of data in the hands of powerful players. They do this by introducing mechanisms to limit collection and processing of data (minimisation) to a purpose. In doing so, PETs also come to obstruct the current extractive logic of digital systems that live from the continuous redesign and optimization of services for the pursuit of revenues. However, PETs were conceived before the rise of current computational infrastructures (i.e., cloud plus end-devices, the latter increasingly rendered accessories of the cloud) that have become concentrated in the hands of large tech companies like Amazon, Microsoft, Google and Apple. These companies have proven that they have the capacity to introduce PETs to billions of users in a way that may provide some individual protections (such as data confidentiality), an impressive feat. However, they often do so devoid of purpose limitation, or worse, in a way that instrumentalises PETs to entrench their infrastructural control, potentially to the detriment of competitors or state actors.

Kris Shrishak, Enforce Senior Fellow at the Irish Council for Civil Liberties, discussed how the Google Privacy Sandbox, while appearing to protect the privacy of browser users, transforms Google’s business relationship with online advertisers in a way that ultimately benefits Google. Next, Thijmen van Gend and Donald Jay Bertulfo, from TU Delft, explained how Amazon Sidewalk, a crowdsourced, privacy-preserving networking service that purports to provide connectivity to IoT devices, attaches these devices (and by extension, their manufacturers and developers) to Amazon’s cloud infrastructure.

Lastly, drawing from first-hand experience, Carmela Troncoso, Associate Professor at EPFL, talked about the technical challenges she and her team encountered in creating a privacy-preserving COVID exposure notification protocol (DP3T), which eventually was taken over by Google and Apple in what later became known as the Google and Apple Exposure Notification (GAEN). The cases demonstrated how large tech companies can leverage PETs to change the stakes of economic competition in certain industries (e.g., online advertising), to create new production environments that they can manage/optimise, and to implement or reconfigure state-level policies at their own discretion.

The audience raised questions about the impact of the growing market and infrastructural power of large tech companies on democracies, industrial policy and antitrust and privacy-related activism. During the open discussion, panelists stressed the need to sit with the problem and think critically on ways by which digital rights and privacy activism can help examine and critique the growing market and infrastructural power of large tech companies.

The growing infrastructure and business model behind (im)migration and surveillance technologies

Session description | Session not recorded

The discussion focused on worldwide surveillance, travel surveillance, GPS tagging for migrants, and strategic litigation news from the UK High Court.

Chris Jones, director of EDRi member Statewatch, outlined several key problems with global surveillance. He highlighted how surveillance, often framed as a security measure, is part of a broader effort to fortify state borders using digital technology, leading to exclusionary practices. Jones also emphasised how surveillance technologies frequently encroach upon fundamental rights such as privacy, data protection, and freedom of movement and expression. The extensive sharing of data across borders exacerbates these concerns, with insufficient safeguards in place. Moreover, he pointed out the lack of recourse for individuals affected by surveillance measures, particularly non-citizens who face discriminatory treatment, such as being placed on the US no-fly list without adequate avenues for redress. Additionally, Jones criticised the undemocratic nature of many surveillance projects, which operate without proper oversight and accountability mechanisms, perpetuating unequal power dynamics.

Investigative journalist Caitlin Chandler spoke about her work on travel surveillance and shared a case study. She explained that now when you fly, the information shared includes luggage, meal preferences, others you travel with, address, email, etc. As a result, an entire psychological profile of you is created. What we face now is governments pushing to expand this travel surveillance to track when you take a bus, train, ship etc.

In a poignant illustration, Chandler highlighted the dire consequences faced by individuals placed on travel surveillance lists. She recounted the case of a high-level Yemeni politician, referred to in the discussion as Hamid, who was wrongly flagged as a terrorist by the US and subsequently placed on the UN’s 1267 blacklist. Despite an investigation by the Yemeni Interior Ministry clearing him of any terrorist links, Hamid’s career and personal safety were jeopardized. Fearing reprisals from US drone strikes, he resigned from politics. Legal representation was scarce due to the risks associated with challenging such listings. However, with the assistance of lawyer Gavin Sullivan, Hamid successfully appealed his designation, although the process revealed the challenges of removing oneself from such lists once established. This case underscores the lack of recourse and accountability for non-US citizens subjected to erroneous surveillance measures.

Monish Bhatia, a Criminology lecturer at the University of York, provided an overview of GPS tagging in the UK, focusing on its application to migrants. Highlighting the intertwining of immigration law and crime control over the past two decades, Bhatia underscored the use of electronic monitoring in immigration enforcement, particularly through ankle monitors. Despite being initially perceived as a humane alternative to incarceration, the efficacy of electronic monitoring remains inconclusive in preventing crime. The transition to GPS devices in 2021 further expanded surveillance capabilities, raising ethical concerns and exacerbating the sense of constant scrutiny and confinement experienced by migrants. Moreover, the psychological toll of this monitoring often goes unrecognised by immigration authorities, compounding the challenges faced by individuals subjected to such measures.

Lucie Audibert, a Senior Lawyer & Legal Officer at EDRi member Privacy International, discussed the implications of GPS tagging in the UK, emphasising the escalating privacy concerns since its implementation. The surge in GPS tagging, evidenced by an 80% increase in tagged individuals, has raised alarm over constant surveillance and data collection. Privacy International’s litigation efforts aim to challenge the legality of GPS tagging, advocating for both the removal of tags from individuals and a comprehensive policy review. Despite being initially intended for foreign national offenders, GPS tracking now extends to asylum seekers, with no time limit on tagging. This pervasive surveillance infringes on fundamental rights, with data collection occurring every 30 seconds, leading to excessive monitoring and potential inaccuracies. Furthermore, the involvement of private companies in data collection and storage raises ethical concerns, especially regarding intrusive biometric monitoring. Overall, the efficacy and ethical implications of GPS tagging remain uncertain, despite substantial investment in the technology.

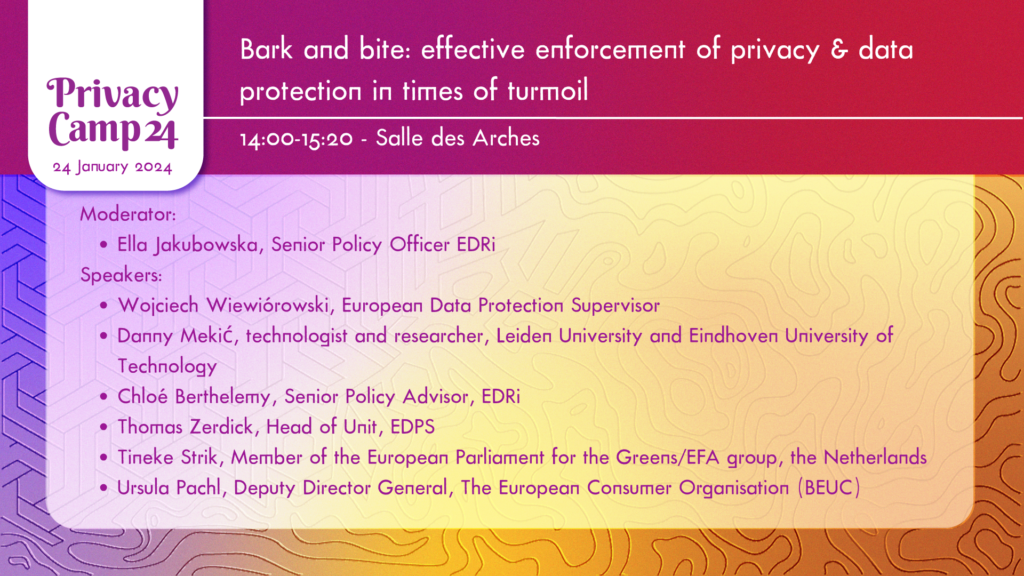

EDPS Summit

Session description | Session recording

The EDPS – Civil Society Summit was hosted at Privacy Camp for the sixth year. This session was introduced by Claire Fernandez, the Executive Director of EDRi, with opening remarks by the European Data Protection Supervisor (EDPS), Wojciech Wiewiórowski. He emphasised the importance of maintaining a connection with civil society organisations (CSOs). He highlighted that the EDPS serves as both supervisor and advisor in the legislative process, making privacy protection more manageable under data protection law.

Thomas Zerdick from EDPS shared their annual plans: finalising an investigation into the European Commission’s use of Microsoft 365 as well as taking legal action against the European Parliament and Council regarding Europol Regulation’s retroactive effect. Simona De Heer, representing the European Parliament for the Greens/EFA group, discussed the challenges migrants face within current political systems. Despite the bleak migration debate, she emphasised the effectiveness of democratic debate and civil society action, citing examples of investigative reports on Frontex and changes in leadership.

Ella Jakubowska, the moderator from European Digital Rights, highlighted EDRi’s efforts to combat the narrative that the EU needs “free flow of data” due to criminal activities. Instead, EDRi focuses on addressing economic and social injustices leading to the current situation. Ursula Pachl, Deputy Director General of The European Consumer Organisation (BEUC), discussed the New Deal in 2018 and the need to continue enforcement work, citing a consumer complaint against Meta’s pay or consent model. Pachl advocated for changing the enforcement landscape with collective redress in GDPR and similar laws. Danny Mekić, a technologist and researcher, shared concerns about privacy infringements in society and emphasised the need for discussions on the kind of society people want to live in, beyond technological implementation.

Using reverse engineering and GDPR to support workers in the gig economy

Session description | Session recording

The panel discussion, led by Simone Robutti of Reversing Works and Tech Workers Coalition, delved into the intricate challenges faced by tech workers amidst the rapidly evolving digital landscape. Justin Nogarede, representing FES Future of Work, set the stage by highlighting the multifaceted ways in which digital tools are exploited to displace workers, suppress their rights, and consolidate data in the hands of tech behemoths. His emphasis on the gig economy underscored the urgent need for collective data, such as salary scales, to empower workers in negotiations—an area where the General Data Protection Regulation (GDPR) can play a pivotal role.

Aida Ponce Del Castillo, from ETUI, advocated for leveraging GDPR as a shield for workers’ digital rights, citing successful precedents like the hefty fine imposed on H&M for breaching data privacy laws. She underscored the importance of labour unions embracing GDPR principles to combat systemic violations effectively. Sana Ahmad, a researcher at Helmut Schmidt University, shed light on the pernicious effects of surveillance on content moderators, whose work is fraught with psychological strain exacerbated by constant monitoring and the threat of punitive measures.

Cansu Safak, representing Worker Info Exchange, delved into the significance of data in uncovering patterns of exploitation, both at the individual and collective levels. She cited instances where workers were dismissed based on intrusive data collection, such as Uber’s controversial case where background data from a personal device was used to justify termination. Safak’s discussion extended to strategic litigation endeavours aimed at dismantling opaque corporate practices and advocating for workers’ rights in the legal arena.

The panellists collectively emphasised the imperative of multidisciplinary collaboration to effectively defend labour rights in the digital age. Audience inquiries explored the future of movement-building amidst rightward shifts in Europe, prompting discussions on localised organising efforts and the necessity of greater union support for marginalised gig workers. Nogarede underscored the urgency of unified civil society action to counter corporate exploitation and safeguard the rights of migrant workers, highlighting the critical role of the European Commission in this endeavour.

In essence, the panel discussion provided a comprehensive overview of the challenges confronting tech workers, underscored the potential of GDPR in protecting digital rights, and advocated for collaborative efforts to combat systemic exploitation in the labour market.

Alternative ‘intimate’ realities: Inclusive ways of framing intimate image based abuse (IIBA)

Session description | Session not recorded

This panel examined alternative ways to define intimate image abuse (IIBA) outside of the context of sexual images to create a more inclusive debate that centers the needs of marginalised communities.

Yigit Aydinalp from the European Sex Workers’ Rights Alliance talked about how sex workers frequently undergo IIBA yet find it hard to seek redress, not least because of how this abuse is defined in our laws and the criminalised approach to dealing with it. He stressed three important things that must change about how we frame IIBA if we want to ensure marginalised groups – such as sex workers and racialised people – find justice:

- What is considered ‘intimate’ must be decided based on context rather than just as sexual images. For example, for many sex workers, their faces are more ‘intimate’ to them than images of their bodies because their faces make them vulnerable to violence from police and societal stigma.

- We need an inclusive definition of IIBA that recognises perpetrators as not just individuals but also States, the police and organisations.

- We need access to justice and wider service provisions that address problems of all victims. For example, the current redress mechanisms leave out marginalised groups who can’t access legal help or psychological support due to stigma or residence/citizenship status.

Anastasia Karagianni from DATAWO agreed that ‘intimacy’ is still defined by very white-cis standards. Even Europe’s directive on combatting violence against women adopted a limited definition of IIBA and proposed criminalisation-focused means of resisting it, rather than preventative or restorative methods. Karagianni also spoke about other forms of IIBA which are often not focused on enough, such as creepshots, upskirting and images taken in health-care settings. She emphasised the need for an intersectional approach when framing IIBA.

EDRi’s Chloé Berthélémy and Itxaso Domínguez spoke about how technology helps sex workers with their work but also causes tremendous harms by facilitating and exacerbating effects of IIBA. Berthélémy also addressed the use of IIBA as a method of social control by law enforcement and states. Images can be very powerful in shaping a narrative and affecting the power struggle between people and the police. For example, bodycams are increasingly being used by the police to produce and disseminate powerful images that are often biased in the police’s favour.

Domínguez raised the scale of dehumanisation that becomes visible when we change the context and consider IIBA as something inflicted upon an entire community. Think of the dehumanising images of migrants all across media, capturing people without consent at one of the most vulnerable moments of their lives. We should also consider where mass surveillance falls when it comes to IIBA, because many of us are now being recorded in public without our consent, often as a method of social control.

Organising towards digital justice in Europe

Session description | Session recording

In one of the closing sessions of Privacy Camp 2024, panelists had an active discussion about the changing digital rights and justice ecosystem and put forward ideas for a vision for digital justice organising in Europe. This session looked at themes of coloniality in the digital rights field and discussed the decolonising digital rights process co-led by EDRi and the Digital Freedom Fund (DFF).

When discussing what role technology plays in maintaining and serving colonial projects, climate and racial justice activist Myriam Douo brought up extractivism as an issue. Many materials that are embedded in our technology and driving digitisation come from countries that are colonised for their resources. Douo also emphasised the need for the digital rights field and the climate movement to work together towards a solution for the climate crisis, rather than opting for ‘green capitalism’ which doesn’t resolve the root issues.

Sarah Chander, Senior Policy Advisor at EDRi, stressed that global crises like the climate crisis should be looked at with a decolonial, socio-economic historic lens because these challenges are a result of ongoing historical processes. Technology is still being produced in service of coloniality, as is evident from the use of surveillance tech by Israel to control Palestinians and carry out a genocide in Gaza.

Following up on this, Laurence Meyer, Racial and Social Justice Lead at DFF, gave some examples of how colonial frameworks are everywhere in tech and rule the digital rights field. Migration came up as a critical example. Migration and colonialism are keeping people captive, and States use technology as a tool to further police and control these vulnerable groups. So, any digital policy that does not consider the repercussions on migrants is going to be exclusionary and contribute to furthering the colonial project.

Luca Stevenson, Director of Programmes at the European Sex Workers’ Rights Alliance (ESWA), brought in the perspective of another marginalised and overlooked group in the digital rights field – sex workers. The digital rights of sex workers still feel like a fringe issue to the rest of the field, but they shouldn’t be. As Stevenson poignantly noted, if we aren’t taking into account the people most vulnerable to privacy violations and discrimination by large tech platforms, then we as a digital rights field are failing our mission.

Then, Chander outlined the three things that need to change in the digital rights field in Europe if we want to contribute to lasting systemic change:

- Broaden our understanding of what are ‘digital rights’ – not just privacy and data protection, larger systemic crises like climate, migration, racism are also digital rights issues.

- Change how the field operates – the field right now feels exclusionary to anyone who isn’t a technical expert, paternalist to those who want to be involved, and extractivist while working with other movements.

- Stop being reformist and resorting to tried-and-tested methodologies for achieving change. Instead, think of what will fundamentally change the current system.

Wartime surveillance: an exception overplaying the rule

Session description | Session recording

The session Wartime surveillance: an exception overplaying the rule, organised by Digital Security Lab Ukraine, focused on the future of AI-driven surveillance tools and their framing within the expected AI Act and old-but-gold GDPR.

The panel discussion brought together representatives of Ukrainian and international NGOs, academia and the Ukrainian governmental cluster Brave1, with particular attention paid to recommendations for other states in similar contexts. For example, the experts stressed the need to collaborate and exchange experience and expertise to ensure AI benefits humanity; to develop a human-rights-oriented legal framework that applies not only domestically but also addresses cross-border threats and challenges; and to make the consultation process multi-stakeholder and meaningful when designing legislative and policy solutions.

The missing piece: using collective action for digital rights protection

Session description | Session not recorded

The panel discussion, expertly moderated by Alexandra Giannopoulou, Project Coordinator at digiRISE and Digital Freedom Fund, delved into critical issues concerning digital welfare, exclusionary policies, and surveillance. Giannopoulou underscored the necessity of acknowledging collective interests and structural injustices that disproportionately affect young individuals.

The speakers brought diverse expertise to the table:

Francesca Episcopo, an EU private law professor at the University of Amsterdam, initiated discussions by examining the intricate relationship between law and structural injustices. She explored the legal system’s capacity to address various forms of injustice, proposing a nuanced analysis of legal means to combat these challenges. Emphasising the significance of fairness and transparency, Episcopo highlighted the pivotal role of these principles in legal approaches.

Elisa Parodi, a Doctoral fellow at SciencesPo, focusing on algorithmic discrimination, shed light on the limitations of the legal system in capturing algorithmic biases. Parodi discussed various types of biases and the formidable challenge of combating AI discrimination, particularly noting the gap in EU equality law regarding AI bias.

Oyidiya Oji, an Advocacy and Policy Advisor on Digital Rights at the European Network Against Racism, emphasised the importance of measuring the impact of research and implementing community-inclusive changes. She stressed the need for bridging gaps between CSOs and communities, and advocated for a critical examination of laws and their protective capabilities.

Kiran Chaudhuri, the Chief Legal Officer at the European Legal Support Centre, addressed the role of CSOs in tackling structural injustices. Chaudhuri highlighted disparities in the application of standards in international conflicts and underscored the significance of supporting grassroots movements. Additionally, he discussed the impact of digital environment issues such as account closures and censorship.

Throughout the discussion, several key points emerged:

- The challenge of utilising legal tools like GDPR to conceptualise and address digital harms.

- The pivotal role of concepts like fairness and transparency in combating social injustices.

- The difficulty of capturing algorithmic bias and discrimination within existing legal frameworks.

- The importance of community involvement and impact measurement in advocacy work.

- The potential for legal action to effect change, alongside the need for a multi-faceted approach including political and policy mobilisation.

- The utilisation of strategic litigation and incident monitoring as part of a broader collective effort.

During the Q&A session, Giannopoulou and the panellists talked about achieving a collective approach versus handling individual cases, the role of strategic litigation, the use of state litigation, and the legal instruments available for collective resistance.

In summary, the dialogue centred around the challenges and opportunities of employing collective action and legal tools to safeguard digital rights, with a keen focus on addressing structural injustices, algorithmic biases, and the key role of community involvement in driving effective change.

Thank you to this year’s Privacy Camp sponsors for their support! Reach out to us if you want to donate to #PrivacyCamp25.