The EU AI Act contemplates a risk-based approach to regulating AI systems, where: (i) AI systems that pose unacceptable risks are prohibited from being placed on the market; (ii) AI systems that pose high risks may be placed on the market subject to mandatory requirements and conformity assessments; and (iii) AI systems that pose limited risks are subject to transparency obligations.

While this seems like an intuitive approach, the current classification of systems under each of these categories reveals a dangerously inconsistent schema. Fundamentally dubious technologies like emotion recognition are characterized as “limited risk”, while systems can only be classified as posing unacceptable risks if they meet unreasonably high thresholds and arbitrary standards. The result exposes individuals and communities to nefarious AI use cases.

There is an urgent need for civil society participants to:

- Come up with a collaborative strategy for more successfully setting red lines for technologies that should not be designed, developed, standardized or deployed in democratic societies.

- Ensure that the high thresholds and wide exceptions carved out for technologies posing “unacceptable risks” are revisited and redrafted more thoughtfully, so that they prioritise the protection of fundamental rights.

- Learn from experiences in non-Western jurisdictions, and from civil society actors working in jurisdictions beyond Europe.

Questions to be asked include:

1. What are the main hurdles to establishing red lines, and does civil society currently have a viable action plan for overcoming them?

2. What successes and challenges have arguments for imposing red lines encountered so far?

3. What are the best ways to reveal the inherent inconsistencies in risk classification within the current proposal?

4. What key factors should civil society keep in mind when strategizing on an advocacy plan?

5. Given the global supply chain of AI technologies, what can we learn from the experiences of jurisdictions around the world?

6. How can civil society build a compelling narrative in favour of imposing red lines on some AI applications?

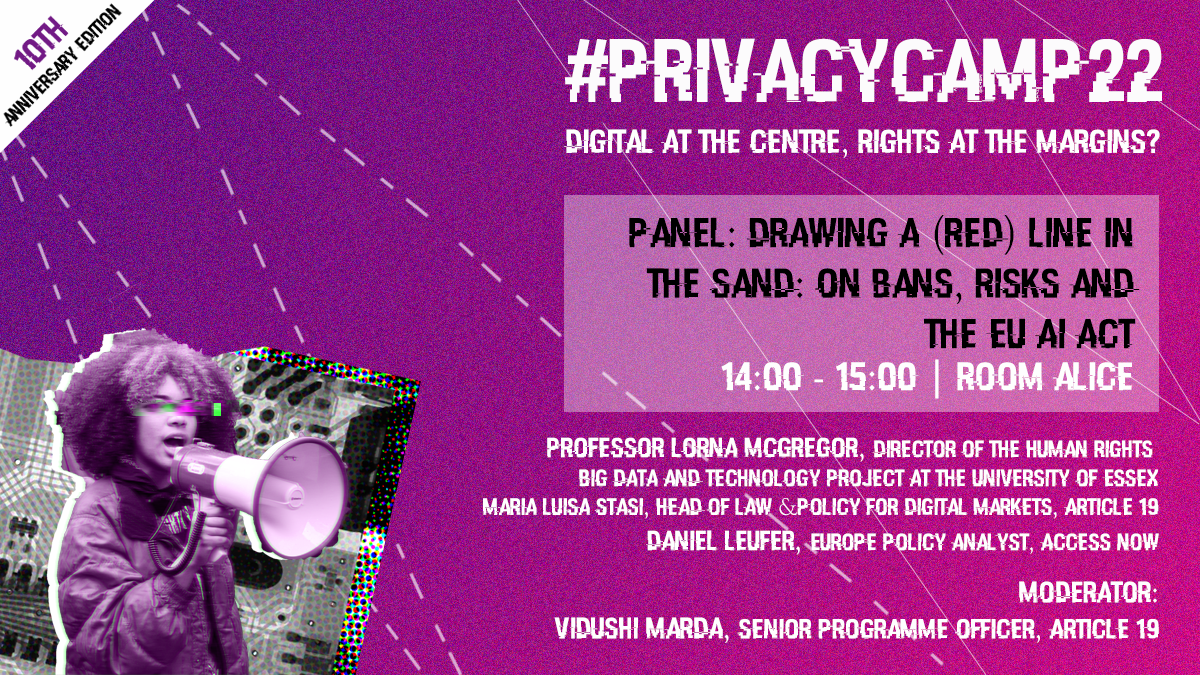

- Moderator: Vidushi Marda, Senior Programme Officer, ARTICLE 19

Speakers:

- Professor Lorna McGregor, Human Rights, Big Data and Technology Project, University of Essex

- Daniel Leufer, Europe Policy Analyst, Access Now

- Maria Luisa Stasi, Head of Law and Policy for digital markets, ARTICLE 19

Check out the full programme here.

Registration is open here until 24 January 2022.